Running Hybrid and Multicloud Containers with Google Anthos

- January 22, 2021

In recent years, enterprises have realised that not every workload should run in the public cloud. Google Anthos offers a platform to deploy and manage containerised workloads across different cloud infrastructures.

Authored by:

Kent Hua, Customer Engineer - App Modernization Specialist, Google

James McElvanna, Solutions Architect, Megaport

Nick De Cristofaro, Networking Specialist Customer Engineer, Google

James Ronneberg, Partner Development Manager (Cloud), Megaport

Introduction

In the early days of cloud computing, the narrative from the cloud service providers (CSPs) was that nearly everything would run in the public cloud, but as Warren Buffett wisely said, “Don’t ask the barber if you need a haircut.” In recent years, enterprises have realised that not every workload can or should run in the public cloud. Most have split services and applications between on-prem infrastructure and private or public clouds based on criteria such as cost, latency, security and compliance.

CSPs Move Towards On-prem

The major cloud providers acknowledged this trend. In 2017, Microsoft introduced Azure Stack, which allowed customers to run Azure in their own data centres using approved partners and hardware (e.g. Dell, HPE, Lenovo and others). AWS announced Outposts, a similar solution that runs on AWS kit and is supported and managed directly by AWS. Around the same time, traditional on-prem players such as Oracle, HPE, IBM, SAP, Nutanix and NetApp all began either to push their own cloud services or to partner with the Big Three CSPs.

Anthos

In 2019, Google introduced Anthos, which at first glance may appear very similar to Azure Stack and Outposts; however, it’s fundamentally different. Anthos was designed to help customers modernise legacy applications with Kubernetes, an open-source system for automating the deployment, scaling and management of containerised applications, originally developed by Google. Rather than a pure on-prem hardware play, Anthos is a platform to deploy and manage containerised workloads across different cloud infrastructures.

Unlike Outposts (AWS-owned hardware) or Azure Stack (Azure-approved OEM hardware), Google Anthos can run on virtualised infrastructure, either in the public CSPs or in hypervisor-based on-prem environments. In 2020, Google also announced support for bare-metal deployments, removing the need for a hypervisor layer. This lets enterprises improve performance by running workloads closer to the hardware, and also take advantage of existing hardware investments.

Learn more about Google Anthos.

Containers

To fully understand Anthos, it’s helpful to go deeper into Kubernetes and the early days of containers. Virtualisation and hypervisors provide a way to abstract away from the hardware and quickly deploy multiple virtual machines (VMs). However, each VM still contains a complete operating system as well as the application code. Containers instead abstract away from the operating system: they share the host’s kernel and package just the application and the elements it needs to run.

Google Kubernetes Engine

Google faced immense challenges scaling infrastructure to support millions of users on applications such as Gmail and Google Maps. In 2004, Google began using containers to power the backends of these applications, and it built Borg, its own internal large-scale cluster management system, to run them. In 2013, Google open-sourced LMCTFY (Let Me Contain That For You), its container stack, whose core concepts were later contributed to Docker’s libcontainer. In 2014, Google open-sourced Kubernetes, a new system built on the lessons learned from Borg. To give an idea of the scale these designs handle, recent estimates suggest that Google launches over four billion containers a week to run its global services.

There are a number of key architectural components within Google Anthos:

Google Kubernetes Engine (commonly known as GKE): Deploys, manages and scales containers across multiple compute nodes pooled into a cluster.

Anthos clusters: Kubernetes clusters running outside Google Cloud Platform (GCP), whether on-prem or in other cloud providers (AWS and Azure), all managed from a single pane of glass.

Anthos Service Mesh (ASM): Simplifies service delivery, from traffic management, observability and mesh telemetry to securing communications between services across private and public clouds.

Anthos Config Management (ACM): Keeps container and cluster configurations consistent across multiple clouds, using a secure, version-controlled repository as the single source of truth for everything related to administration and configuration. Its Policy Controller can check, audit and enforce cluster compliance with policies related to security, regulations or arbitrary business rules.

Attached Clusters: A single place to operate clusters running in other clouds, letting you use services such as Anthos Service Mesh and Anthos Config Management on EKS in AWS and AKS in Azure.
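To make the Policy Controller idea more concrete, here is a minimal Python sketch of one compliance rule audited across several clusters. It is a hypothetical illustration, not the Anthos API: Policy Controller itself is built on the Open Policy Agent Gatekeeper project and expresses rules as Kubernetes constraints. The cluster names, the required labels (`owner`, `cost-centre`) and the `audit` function are all assumptions made for this example, and manifests are represented as plain dictionaries.

```python
from dataclasses import dataclass

# Hypothetical rule: every Deployment must carry these labels.
# The label names are illustrative assumptions, not Anthos defaults.
REQUIRED_LABELS = {"owner", "cost-centre"}


@dataclass
class Violation:
    cluster: str
    resource: str
    missing: list


def audit(clusters):
    """Audit every manifest in every cluster against the same rule."""
    violations = []
    for cluster, manifests in clusters.items():
        for manifest in manifests:
            if manifest.get("kind") != "Deployment":
                continue  # this rule only applies to Deployments
            labels = manifest.get("metadata", {}).get("labels", {})
            missing = sorted(REQUIRED_LABELS - set(labels))
            if missing:
                name = manifest["metadata"]["name"]
                violations.append(Violation(cluster, name, missing))
    return violations


if __name__ == "__main__":
    # One rule, audited across hypothetical clusters in GCP, AWS and on-prem.
    clusters = {
        "gke-gcp": [{"kind": "Deployment",
                     "metadata": {"name": "frontend",
                                  "labels": {"owner": "web", "cost-centre": "42"}}}],
        "gke-aws": [{"kind": "Deployment",
                     "metadata": {"name": "orders", "labels": {"owner": "shop"}}}],
        "onprem": [{"kind": "Service", "metadata": {"name": "orders-svc"}}],
    }
    for v in audit(clusters):
        print(f"{v.cluster}/{v.resource}: missing {v.missing}")
```

Running it flags only the `orders` Deployment in `gke-aws`, which lacks `cost-centre`. In a real ACM setup the rule would live in the version-controlled repository and be applied to every registered cluster, rather than being hard-coded in a script.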

Learn more about Kubernetes.

Designed for Multicloud

Portability and scalability are among the key appeals of containers and Kubernetes, and arguably Google Anthos’s most distinctive feature is its ability to support multiple cloud deployment models, including:

- single public cloud and on-prem

- multiple public clouds and on-prem

- multiple public clouds

Anthos allows you to manage all of these scenarios as if they were a single cloud, but the clusters still need reliable, private connectivity between them, and that’s where Megaport can help. Both Anthos and Megaport are cloud-agnostic, so customers can move their containers and workloads to whichever platform suits them. Just as Megaport’s Network as a Service (NaaS) lets customers scale connectivity up and down on demand, Anthos gives its customers the ability to move workloads around in real time and scale up and down as they need.

Find out more about Megaport Cloud Router, our virtual routing service that provides private connectivity at Layer 3.

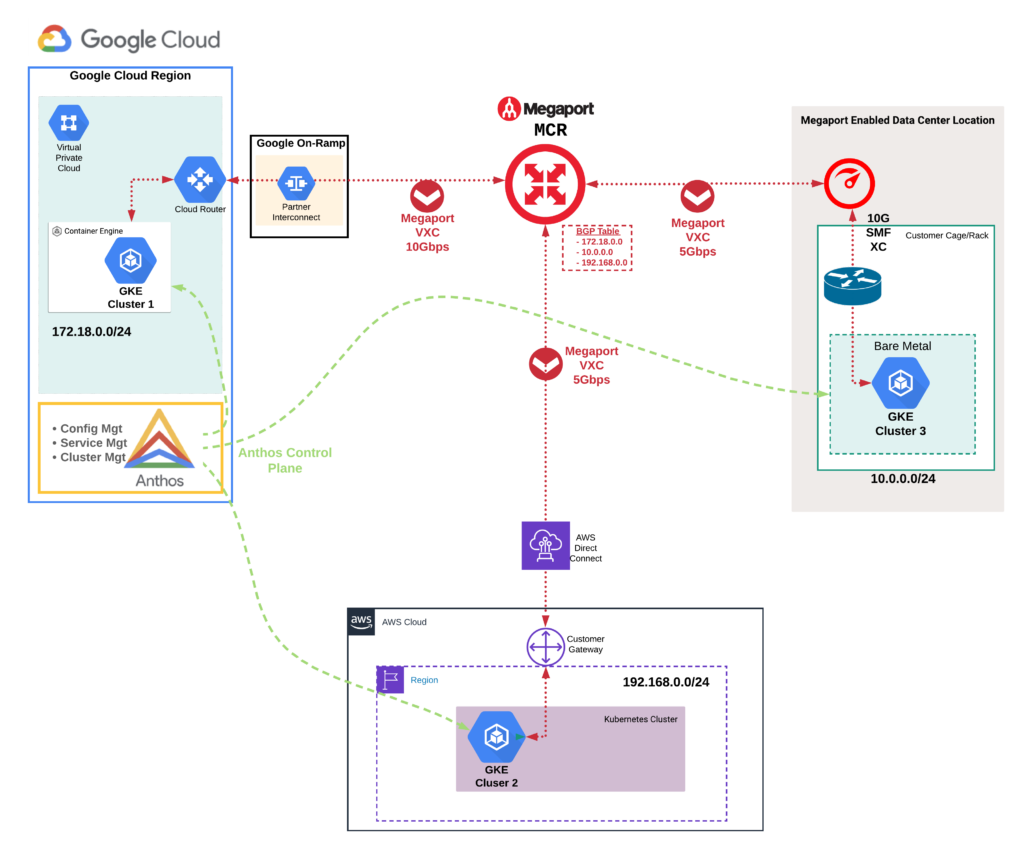

Megaport/Anthos Example

The diagram shows an enterprise with three GKE clusters: the first running in GCP, the second in AWS and the third in the enterprise’s colocation facility. All three clusters are managed centrally through the Anthos platform hosted in GCP. Depending on customer requirements, whether the workloads are driven by demand, low latency or data residency, traffic can be directed to any of the three clusters over Megaport’s high-speed Layer 2 backbone. This design also uses Megaport Cloud Router (MCR) as the routing hub, exchanging full BGP routes between the three locations. This enables all the GKE clusters to reach each other (on both the control and data planes) without hairpinning back through the colocation facility or travelling over the public internet via a VPN.
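The hub-and-spoke routing in this example can be sketched in a few lines of Python. This is a deliberately simplified model under stated assumptions, not how MCR is configured: the site names and address prefixes are hypothetical, and real BGP attributes (AS paths, local preference, MED) are ignored. Each site advertises its prefixes to the hub, the hub re-advertises them to every other site, and longest-prefix matching picks the route, so traffic between any two clusters takes a single hop through MCR.

```python
import ipaddress

# Hypothetical address plan: each site advertises these prefixes over BGP.
SITES = {
    "gcp": ["10.10.0.0/16"],
    "aws": ["10.20.0.0/16"],
    "colo": ["10.30.0.0/16", "192.168.0.0/24"],
}
HUB = "mcr"  # Megaport Cloud Router acting as the routing hub


def hub_rib(sites):
    """Routes learned by the hub: every prefix, mapped to the site that sent it."""
    return {
        ipaddress.ip_network(prefix): site
        for site, prefixes in sites.items()
        for prefix in prefixes
    }


def site_routes(sites, local):
    """Routes a site learns from the hub: all other sites' prefixes,
    each with the hub as next hop."""
    return {net: HUB for net, origin in hub_rib(sites).items() if origin != local}


def lookup(routes, dest):
    """Longest-prefix match of a destination IP against a route table."""
    addr = ipaddress.ip_address(dest)
    matches = [net for net in routes if addr in net]
    if not matches:
        return None
    return routes[max(matches, key=lambda net: net.prefixlen)]


def path(sites, src, dest):
    """Hop-by-hop path from site `src` to an address at another site."""
    if lookup(site_routes(sites, src), dest) != HUB:
        return None  # no route learned, e.g. a public internet address
    return [src, HUB, lookup(hub_rib(sites), dest)]


if __name__ == "__main__":
    print(path(SITES, "gcp", "10.20.5.7"))
    print(path(SITES, "aws", "192.168.0.10"))
    print(path(SITES, "gcp", "8.8.8.8"))
```

For instance, `path(SITES, "gcp", "10.20.5.7")` returns `["gcp", "mcr", "aws"]`: the GCP cluster reaches the AWS cluster through the hub directly, never via the colocation facility, while an address no site advertises gets no private route at all.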

For example, an application can have its front end hosted in GCP behind an L7 ingress, while its backend components are hosted on-prem or in other clouds and managed with ASM. An alternative design could use replicated environments in GCP and AWS, with external traffic entering through GCP via Traffic Director and then being delivered to an AWS endpoint over MCR’s private connection.
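The replicated GCP/AWS design can be illustrated with a weighted, health-aware backend picker. This is a hypothetical sketch only: it assumes an 80/20 split between a GCP replica and an AWS replica reached over MCR, and the `Backend` class and `pick` function are invented for illustration. It does not reproduce Traffic Director’s own configuration model or API.

```python
import random
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int           # relative share of traffic (hypothetical values)
    healthy: bool = True  # would come from health checks in a real system


def pick(backends, rng=random):
    """Choose one healthy backend, in proportion to its weight."""
    live = [b for b in backends if b.healthy and b.weight > 0]
    if not live:
        raise RuntimeError("no healthy backend available")
    return rng.choices(live, weights=[b.weight for b in live], k=1)[0]


if __name__ == "__main__":
    backends = [
        Backend("gcp-replica", weight=80),
        Backend("aws-replica-via-mcr", weight=20),
    ]
    rng = random.Random(42)  # seeded so the run is repeatable
    counts = {b.name: 0 for b in backends}
    for _ in range(10_000):
        counts[pick(backends, rng).name] += 1
    print(counts)  # approximately an 80/20 split

    # If the GCP replica fails its health checks, all traffic shifts to AWS.
    backends[0].healthy = False
    print(pick(backends, rng).name)
```

One design choice worth noting: health filtering happens before weighting, so a failed replica drops out entirely instead of silently absorbing its share of requests.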

Summary

Gartner predicts that by 2022, more than 75% of global organisations will be running containerised applications in production. Together, Google Anthos and Megaport can provide a solid foundation for this growing multicloud, containerised world.

Megaport is one of Google’s leading global interconnect partners, and no provider has more GCP on-ramps in North America than we do.

Learn more about Google Anthos and Megaport

To learn more about Google Anthos, visit Google Cloud here. You can also read more about Megaport’s partnership with GCP here.